Advancing AI Integration in Clinical Decision-Making

A national healthcare organisation sought to integrate artificial intelligence (AI) into its clinical decision-making processes to enhance patient referral efficiency and reduce staff workload. Effective referral and treatment prioritisation—based on the urgency of medical needs—is essential in an environment where healthcare resources are limited, and the COVID-19 pandemic has further strained capacity. AI technologies, particularly autonomous computer programmes (ACPs), are well-suited to making rapid, data-driven decisions thanks to their computational power.

However, initial consultations revealed that clinicians were resistant to adopting these technologies, citing concerns about increased interaction with digital systems. Resistance was also anticipated from patients and the broader public, who are key stakeholders in national healthcare services. Public engagement with digital health services will ultimately determine the pace and success of autonomous technology adoption.

Understanding Barriers to AI Acceptance

In a research consultancy project funded jointly by the organisation and Innovate UK, our team was tasked with identifying the market potential for AI adoption and the behavioural barriers to it. We aimed to develop targeted recommendations that would support behaviour change and build trust in AI technologies.

We began with a comprehensive literature review, focusing on existing technologies such as e-triage systems, which use machine learning to assess the urgency of care needs in emergency departments. We also explored research on AI acceptance and the phenomenon of algorithm aversion.

Our review found that people often prefer human clinicians to ACPs, even when told that ACP performance is equivalent or superior. This preference appears rooted in concerns about the lack of personalisation in ACP decisions and the perception that AI treats individuals as “average” rather than unique. In healthcare, where decisions carry ethical weight, the perceived absence of a “mind” in ACPs (that is, a capacity for reasoning, planning, or empathy) can fuel discomfort.

Although ACPs can generate decision rules and draw inferences from data, they are not typically seen as capable of emotional engagement or moral reasoning. These human-like qualities—such as agency (the ability to reason and act intentionally) and emotional experience—are considered vital in making morally sensitive decisions.

Novel Findings

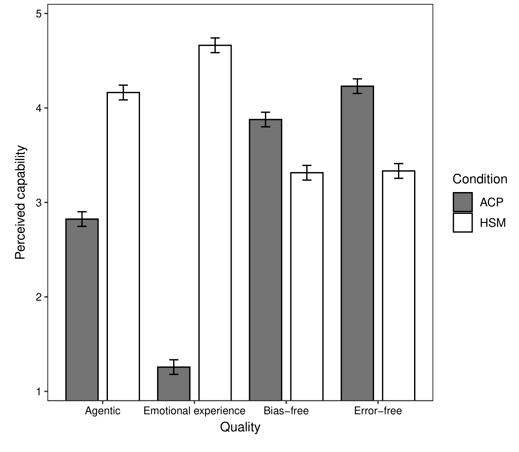

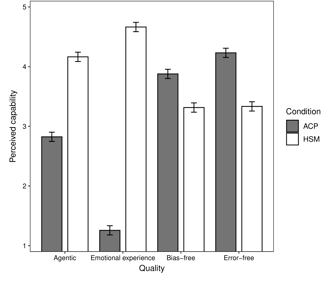

Our findings identified four key dimensions on which both ACPs and human staff members (HSMs) are judged: agency, emotional experience, bias-free decision-making, and error-free decision-making. HSMs were seen as stronger on the first two, human-centric qualities, while ACPs were rated higher on the latter two, which are associated with technical performance. To measure these perceptions systematically, we developed and validated the Healthcare Decision Quality (HDQ) scale, a tool for future research and policy planning.

Key Insights and Recommendations

Our research demonstrates that while ACPs are not perceived to possess all the attributes of human clinicians, their advantages in data processing and decision accuracy can drive acceptance—if effectively communicated. By emphasising the unique strengths of ACPs, especially their reliability and efficiency, healthcare organisations can begin to build public trust and overcome resistance.

We recommended that the organisation communicate the strengths of ACPs, particularly their error-reducing capabilities. It is also important to acknowledge public concerns about empathy and individualisation, since ignoring them risks further resistance. Performance comparisons can show where ACPs outperform human decision-makers, which, as our research shows, is persuasive to the public.

Overcoming Barriers and Building Trust in AI

Despite these concerns, AI systems offer substantial advantages. Deep learning tools can triage patients by analysing datasets from millions of cases, identifying those most at risk. Unlike human staff, ACPs are not prone to random clerical errors and may be seen as more objective—less susceptible to bias based on age, gender, ethnicity, or other factors (although data-driven models can replicate societal biases if not carefully managed).

We employed a mixed-methods approach to explore public perceptions of ACP capabilities. This included focus groups, open-ended survey responses, and a novel judgement task to assess the value people place on various qualities in healthcare decision-making.

Adopting hybrid decision models, where AI supports but does not replace clinicians, may be an effective transitional strategy. By aligning the design, communication, and deployment of AI technologies with public values and expectations, healthcare systems can advance toward ethical, effective, and trusted AI adoption.

Importantly, although people generally preferred human-made decisions, ACP performance that exceeded human levels—especially in being error-free—was sufficient to reverse these preferences. This suggests that people may be willing to accept autonomous systems under the right conditions.

References

Rolison, J. J., Gooding, P. L., Russo, R., & Buchanan, K. E. (2024). Who should decide how limited healthcare resources are prioritized? Autonomous technology as a compelling alternative to humans. PLOS ONE, 19, e0292944. https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0292944


Email: jonathan.rolison@outlook.com
